3. Unintentional Collateral Damage During Optimization Efforts

A page has the potential to rank for multiple keywords.

Finding the balance between the right content, the right target keywords, and the right optimization efforts is a challenge.

As an SEO practitioner, the following scenarios may seem familiar to you:

- A website contains multiple pages covering the same topical theme, so external backlinks and target keywords are spread across those pages, and the best-quality links are not optimized for the right target keywords.

- A site undergoes a rebuild or redesign that negatively impacts SEO.

- Conflicts of interest arise between various business units when it comes to optimization priorities. Without a mechanism to identify which optimization efforts will have the greatest impact on search rankings and business outcomes, it is hard to make a business case for one optimization strategy over another.

4. The Unreliability of Standard CTR Benchmarks

There is significant uncertainty in the number of click-throughs that each URL will receive at different positions on the search engine results page (SERP).

This is because the click-through rate (CTR) a page generates is a function of multiple elements in the SERP layout, including:

- The relative position of the URL on the SERP for a specific keyword.

- The number of ads above the organic results for the target keyword.

- The packs that are displayed (answer box, local pack, brand pack, etc.).

- Display of thumbnails (images, videos, reviews, rating scores, etc.).

- The user's existing association with the brand.

Calculating CTR by rank position is just one measurement challenge.

The true business impact of SEO is also hard to capture, because it is difficult to identify the conversion rate that a page will generate and the imputed value of each conversion.

Search professionals must have strong analytical skills to compute these metrics.

5. Inability to Build a Business Case for Further Investments into Data Science

When making investment decisions, business stakeholders want to understand the impact of individual initiatives on business outcomes.

If an initiative can be quantified, it is easier to get the necessary level of investment and prioritize the work.

This is where SEO often struggles. Business leaders find SEO efforts to be iterative and unending, while search practitioners fall short in trying to correlate rank with impact on traffic, conversions, leads, and revenue.

The ROI of SEO can seem minimal to leadership when compared to the more predictable, measurable and immediate results produced by other channels.

A further complication is the investment and resources required to set up data science processes in-house to start solving for SEO predictability.

The skills, the people, the scoring models, the culture: the challenges are daunting.

Making SEO Predictable: The Need for Scoring Models

Now that we’ve established the path to predictability is one fraught with challenges, let us go back to my initial question.

Can SEO be made predictable?

Is there value in investing to make SEO predictability a reality?

The short answer: yes!

At iQuanti, our dedicated data science team has approached solving for SEO predictability in three steps:

- Step 1: Define metrics that are indicative of SEO success and integrate comprehensive data from the best sources into a single warehouse.

- Step 2: Reverse engineer Google’s search results by developing scoring models and machine learning algorithms for relevancy, authority, and accessibility signals.

- Step 3: Use outputs from the algorithm to enable specific and actionable insights into page/site performance and develop simulative capabilities to enable testing a strategy (like adding a backlink or making a content change) before pushing to production – thus making SEO predictable.

Step 1: Identification of Critical Variables & Data Integration

As mentioned before, one of the major roadblocks to SEO success is the inability to integrate all necessary metrics in one place.

SEO teams use a myriad of tools and browser extensions to gather performance data – both their own, and comparative/competitive data as well.

What most enterprise SEO platforms fail at, however, is making all the SEO variables and metrics for any particular keyword or page accessible in one view.

This is the first and most critical step. And while it requires access to the various SEO tools and basic data warehousing capabilities, it is comparatively easy to put into practice.

We haven’t yet entered the skill- and resource-heavy data modeling phase, but with the right data analytics team in place, the integration of data itself could prove to be a valuable first step toward SEO predictability.

How?

Let me explain with an example.

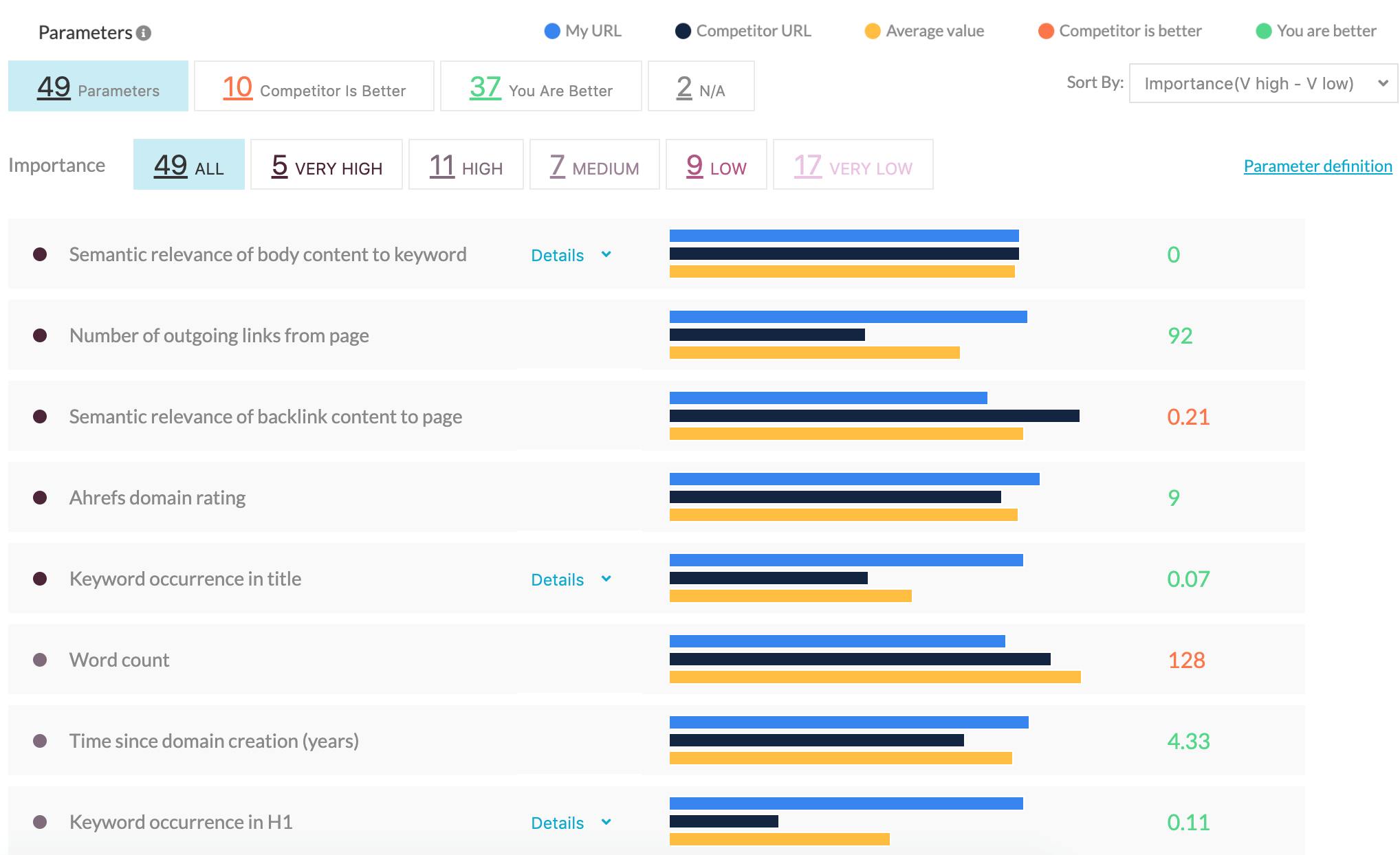

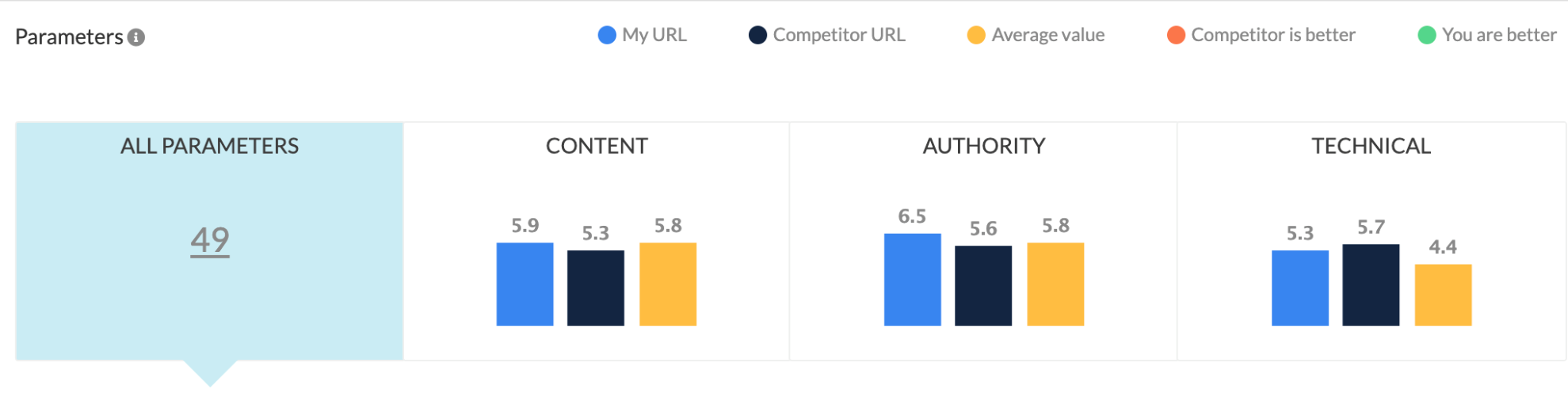

If you are able to bring together all SEO metrics for your URL www.example.com with an understanding of the value of each metric, it becomes easy to build a simple comparative scoring model allowing you to benchmark your URL against the top-performing URLs in search. See below.

PRO TIPS: For text data (or content), consider a mix of the following variables:

For link data, consider the:

Automate this, and you have a reliable, continuous benchmarking process at your disposal. Every time you implement changes toward optimization, you can actually see (and measure) the needle moving on SERPs.

Tracking your score and its components over a period of time can provide insights into the tactics deployed by competitors (e.g., whether they are improving page relevancy or aggressively building authority) and the corresponding counter-movements to ensure that your site is consistently competing at a high level.
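As a rough illustration of the comparative scoring idea in this step, here is a minimal Python sketch. The metric names, weights, and values are all hypothetical placeholders rather than iQuanti's actual model; the only point is to show how a page can be benchmarked against the best value each top-ranking URL achieves per metric.

```python
# Hypothetical metrics and weights, chosen only for illustration.
WEIGHTS = {"keyword_in_title": 0.3, "word_count": 0.2, "referring_domains": 0.5}

def normalize(value, best):
    """Scale a metric to 0..1 relative to the best competitor value."""
    return min(value / best, 1.0) if best > 0 else 0.0

def benchmark_score(page, competitors, weights=WEIGHTS):
    """Weighted 0..100 score of `page` against the per-metric best competitor."""
    score = 0.0
    for metric, weight in weights.items():
        best = max(c[metric] for c in competitors)
        score += weight * normalize(page[metric], best)
    return round(100 * score, 1)

# Made-up data for two top-ranking URLs and your own page.
top_ranked = [
    {"keyword_in_title": 1, "word_count": 1800, "referring_domains": 120},
    {"keyword_in_title": 1, "word_count": 1500, "referring_domains": 80},
]
my_page = {"keyword_in_title": 1, "word_count": 900, "referring_domains": 30}

score = benchmark_score(my_page, top_ranked)
print(score)
```

Running the same function on a schedule and storing the result per keyword would give the continuous benchmark described above.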

Step 2: Building Algorithmic Scoring Models

Search rankings reflect the collective effect of multiple variables all at once.

To understand the impact of any single variable on rankings, we should ensure that all other parameters are kept constant as this isolated variable changes.

Then, to arrive at a “score,” there are two ways to frame the modeling problem:

- As a classification problem [good vs. not good]

  - In this approach, you label all top-10-ranked URLs (i.e., those on the first SERP) as 1 and the rest as 0, then try to reverse engineer how different variables contribute to a URL appearing on the first page.

- As a ranking problem

  - In this approach, rank is treated as a continuous metric, and the model learns how important each variable is to ranking higher or lower.
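To make the two framings concrete, the sketch below builds both kinds of target from the same SERP data. The rows, URLs, and the `referring_domains` feature are invented for illustration, and no particular model is implied; any classifier or regression/ranking model could be trained on the resulting targets.

```python
# Hypothetical SERP rows: (url, rank, referring_domains). Values are made up.
serp_rows = [
    ("https://www.example.com/a", 1, 140),
    ("https://www.example.com/b", 7, 60),
    ("https://www.example.com/c", 12, 35),
    ("https://www.example.com/d", 25, 5),
]

def classification_target(rank, first_page_size=10):
    """Framing 1: 1 if the URL is on the first SERP, otherwise 0."""
    return 1 if rank <= first_page_size else 0

def ranking_target(rank):
    """Framing 2: keep the rank itself as the value to predict."""
    return float(rank)

# The features stay the same in both framings; only the target changes.
features = [[domains] for _, _, domains in serp_rows]
class_labels = [classification_target(rank) for _, rank, _ in serp_rows]
rank_labels = [ranking_target(rank) for _, rank, _ in serp_rows]

print(class_labels)
print(rank_labels)
```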

Creating such an environment where we can identify the individual and collective effects of multiple variables requires a massive corpus of data.

While there are hundreds of variables that search engines take into consideration for ranking pages, they can broadly be classified into content (on-page), authority (off-page) and technical parameters.

I propose focusing on developing a scoring model that helps you assign and measure scores across these four elements:

1. Relevance Score

This score should review on-page content elements, including:

- The relevance of the page’s main content when compared to the targeted search keyword.

- How well the page’s content signals are communicated by marked-up elements of the page (e.g., title, H1, H2, image alt text, meta description, etc.).
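One very simple way to approximate the second point is to measure how many of the target keyword's terms appear in each marked-up element. This is a deliberately naive sketch under that assumption; the element names and sample text are hypothetical, and a production relevance score would likely use richer text features than exact term overlap.

```python
import re

def tokens(text):
    """Lowercase alphanumeric word set for a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def element_coverage(keyword, page_elements):
    """Fraction of keyword terms found in each marked-up element (0..1)."""
    keyword_terms = tokens(keyword)
    if not keyword_terms:
        return {name: 0.0 for name in page_elements}
    return {
        name: len(keyword_terms & tokens(text)) / len(keyword_terms)
        for name, text in page_elements.items()
    }

# Hypothetical page elements for illustration.
elements = {
    "title": "How to Build an SEO Scoring Model",
    "h1": "Making SEO Predictable",
    "meta_description": "",
}
coverage = element_coverage("seo scoring model", elements)
print(coverage)
```

Elements with low coverage are candidates for a closer look, although a high term overlap alone does not guarantee relevance.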

2. Authority Score

This should capture the signals of authority, including:

- The number of inbound links to the page.

- The level of quality of sites that are providing these links.

- The context in which these links are given.

- Whether that context is relevant to the target page and the query.

3. Accessibility Score

This should capture all the technical parameters of the site that are required for a good experience – crawlability of the page, page load times, canonical tags, geo settings of the page, etc.

4. CTR Algorithm/Curve

The CTR depends on various factors, such as keyword demand, industry, whether the keyword is a brand name, and the layout of the SERP (i.e., whether the SERP includes an answer box, videos, images, or news content).

The objective here is to determine the estimated click-through rate for each ranking position, granting SEO professionals knowledge of how each keyword contributes to the overall page traffic.

This makes it easier for the SEO program to monitor the most important keywords.
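The sketch below shows how a CTR curve can be turned into per-keyword traffic estimates for a page. The curve values, keywords, and search volumes are placeholders, not benchmarks; in practice the curve would be estimated from your own click data and segmented by the factors listed above.

```python
# Hypothetical CTR by organic position. Placeholder values only.
CTR_CURVE = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}

def estimated_clicks(monthly_searches, position, curve=CTR_CURVE):
    """Expected monthly clicks; positions missing from the curve count as 0."""
    return monthly_searches * curve.get(position, 0.0)

def keyword_contributions(keywords, curve=CTR_CURVE):
    """Return {keyword: share of the page's estimated clicks}."""
    clicks = {kw: estimated_clicks(vol, pos, curve) for kw, vol, pos in keywords}
    total = sum(clicks.values())
    return {kw: (c / total if total else 0.0) for kw, c in clicks.items()}

# (keyword, monthly searches, current position), all made up.
page_keywords = [
    ("seo scoring model", 1000, 2),
    ("predictable seo", 500, 1),
    ("seo forecasting", 2000, 9),
]
shares = keyword_contributions(page_keywords)
print(shares)
```

In this toy data the highest-volume keyword contributes nothing because the page ranks outside the positions covered by the curve, which is exactly the kind of gap such a view helps surface.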

If you can compare the relevance, authority, and accessibility sub-scores and their underlying attributes, you can clearly identify the reasons for underperformance: whether the target page is not relevant enough, whether the site lacks authority on the topic, or whether something in the technical experience is stopping the page from ranking.

It will also pinpoint the exact attributes that are causing this gap to provide specific actionable insights for content teams to address.
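A gap diagnosis of this kind can be sketched in a few lines. The sub-score values below are invented, and the sketch assumes each sub-score is already on a common 0 to 100 scale.

```python
def diagnose(page_scores, benchmark_scores):
    """Return per-sub-score gaps and the name of the largest gap."""
    gaps = {name: benchmark_scores[name] - page_scores[name] for name in benchmark_scores}
    weakest = max(gaps, key=gaps.get)
    return gaps, weakest

# Hypothetical sub-scores for your page and for the top-ranking benchmark.
page = {"relevance": 72, "authority": 40, "accessibility": 90}
benchmark = {"relevance": 85, "authority": 78, "accessibility": 92}

gaps, weakest = diagnose(page, benchmark)
print(gaps)
print(weakest)
```

Applying the same comparison to the attributes underneath the weakest sub-score narrows the gap down to specific items a content or link team can act on.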

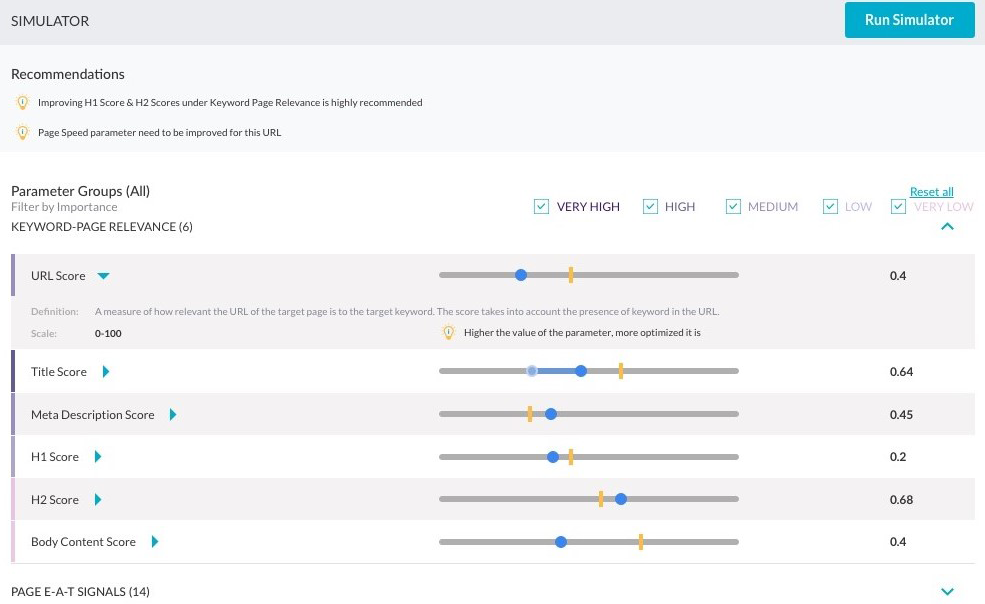

Step 3: Strategy & Simulation

An ideal system would go one step further and provide an environment where SEO pros can not only uncover actionable insights but also simulate proposed changes and assess their impact before implementing them in the live environment.

The ability to simulate changes and assess impact builds predictability into the results. The potential applications of such simulative capabilities are huge in an SEO program.

1. Predictability in Planning and Prioritization

Resources and budgets are always limited. Defining where to apply optimization efforts to get the best bang for your buck is a challenge.

A predictive model can calculate the gap between your pages and the top-ranking pages for all the keywords in your brand vertical.

The extent of this gap, the resources required to close it and the potential traffic that can be earned at various ranks can help prioritize your short-, medium- and long-term optimization efforts.
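One hedged way to combine these three inputs is to rank opportunities by potential clicks per hour of effort. The effort model here (a fixed number of hours per point of score gap) is an assumption made purely for illustration, as are the keywords and numbers.

```python
def prioritize(opportunities, hours_per_gap_point=2.0):
    """Rank keywords by estimated potential clicks per hour of effort."""
    ranked = []
    for opp in opportunities:
        # Assumed effort model: effort grows linearly with the score gap.
        effort_hours = opp["score_gap"] * hours_per_gap_point
        clicks_per_hour = (
            opp["potential_clicks"] / effort_hours if effort_hours else float("inf")
        )
        ranked.append((opp["keyword"], round(clicks_per_hour, 1)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)

# Hypothetical opportunities: gap to the top-ranking page and clicks on offer.
opportunities = [
    {"keyword": "keyword a", "score_gap": 10, "potential_clicks": 400},
    {"keyword": "keyword b", "score_gap": 45, "potential_clicks": 900},
    {"keyword": "keyword c", "score_gap": 5, "potential_clicks": 150},
]
plan = prioritize(opportunities)
print(plan)
```

Small gaps with decent traffic float to the top as short-term wins, while large gaps with large traffic potential fall into the medium- or long-term bucket.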

2. Predictability in Ranking and Traffic Through Content, Authority, and Accessibility Simulation

A content simulation module will allow for content changes to be simulated and the resulting improvement in relevance scores – as well as any potential gains in ranking – to be estimated.

With this kind of simulation tool, users can focus on improving poorly performing attributes and protect the page elements that are driving ranks and traffic.

A simulation environment could grant users the ability to test hypothetical optimization tactics (e.g., updated backlinks and technical parameters) and predict the impact of these changes.

SEO professionals could then make informed choices about which changes to implement to drive improvements in performance while protecting any existing high-performing page elements.
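The core of such a simulation is simply re-scoring a copy of the page with the proposed change applied. The sketch below assumes a simple linear scoring model with made-up weights and sub-scores; a real model would be fitted to data and would likely be more complex.

```python
# Hypothetical linear model weights over the three sub-scores.
MODEL_WEIGHTS = {"relevance": 0.4, "authority": 0.4, "accessibility": 0.2}

def overall_score(sub_scores, weights=MODEL_WEIGHTS):
    """Weighted sum of sub-scores under the assumed linear model."""
    return sum(weights[name] * sub_scores[name] for name in weights)

def simulate(sub_scores, changes, weights=MODEL_WEIGHTS):
    """Apply hypothetical changes to a copy and return (before, after, delta)."""
    before = overall_score(sub_scores, weights)
    proposed = dict(sub_scores)  # never mutate the live page's data
    for name, delta in changes.items():
        proposed[name] = max(0.0, min(100.0, proposed[name] + delta))
    after = overall_score(proposed, weights)
    return before, after, after - before

live_page = {"relevance": 60, "authority": 40, "accessibility": 90}
# What if a link-building effort lifted the authority score by 10 points?
before, after, delta = simulate(live_page, {"authority": 10})
print(before, after, delta)
```

Because the live data is copied rather than modified, several competing tactics can be compared side by side before anything is pushed to production.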

3. Predictability in the Business Impact of SEO Efforts

SEO professionals would be able to use the model to figure out whether their change is having any bottom-line impact.

At any given or predicted rank, SEO pros can use the CTR curve to estimate roughly how many click-throughs the domain may receive at that position.

Integrating this with website analytics and conversion rate data allows conversions to be tied to search ranking – thus forecasting the business impact of your SEO efforts in terms of conversions or revenue.
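The arithmetic behind this forecast is straightforward: searches times CTR at a given rank gives clicks, clicks times conversion rate gives conversions, and conversions times value per conversion gives revenue. The sketch below uses a placeholder CTR curve and invented volumes, rates, and values.

```python
# Hypothetical CTR by organic position. Placeholder values only.
CTR_CURVE = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}

def forecast(monthly_searches, rank, conversion_rate, value_per_conversion,
             curve=CTR_CURVE):
    """Estimate clicks, conversions, and revenue at a given rank."""
    clicks = monthly_searches * curve.get(rank, 0.0)
    conversions = clicks * conversion_rate
    return {
        "clicks": clicks,
        "conversions": conversions,
        "revenue": conversions * value_per_conversion,
    }

# Made-up inputs: 10,000 searches, 2% conversion rate, 50 per conversion.
current = forecast(10_000, rank=5, conversion_rate=0.02, value_per_conversion=50.0)
predicted = forecast(10_000, rank=2, conversion_rate=0.02, value_per_conversion=50.0)
uplift = predicted["revenue"] - current["revenue"]
print(current, predicted, uplift)
```

Comparing the current rank with a predicted rank in this way expresses a proposed optimization in the revenue terms that business stakeholders ask for.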

The Final Word

There is no one-size-fits-all when it comes to developing SEO scoring models. My attempt has been to give a high-level view of what is possible.

If you are able to capture data at its most granular level, you can aggregate it the way you want.

This is our experience at iQuanti: once you set out on this journey, you’ll have more questions, figure out new solutions, and develop new ways to use this data for your own use cases.

You may start with simple linear models and then improve accuracy with non-linear models, ensembles of different models, or separate models for different categories of keywords (high volume, long tail, by industry category, and so on).

Even if you are not able to build these algorithms, I still see value in this exercise.

If only a few SEO professionals get excited by the power of data to help build predictability, it can change the way we approach search optimization altogether.

You’ll start to bring in more data to your day-to-day SEO activities and begin thinking about SEO as a quantitative exercise: measurable, reportable, and predictable.