July 20, 2017

At the beginning of the year, Google released a new feature called IF function ads. We wrote about this new feature in the post “How To Use AdWords Ads IF Functionality.” If you’re curious about how these customizable ads perform, here are some answers.

What Are IF Functions?

IF function ads allow you to set specific conditions based on audience or device data. If the conditions are met, the ad shows uniquely tailored messaging. If they are not met, the ad shows default messaging. For example, you can show a specific message to mobile users and a default message to desktop users.
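For readers who have not seen the syntax, here is a minimal sketch of what a device-based IF function looks like in an ad text field, as I understand the `{=IF(condition,text):default text}` format from Google's ad customizer documentation. The Python helper `device_if` is hypothetical and only assembles the string; the two pieces of copy are the ones quoted later in this post.

```python
def device_if(mobile_text: str, default_text: str) -> str:
    """Assemble a device-based IF function string (hypothetical helper).

    Assumed format: {=IF(device=mobile,<mobile text>):<default text>}
    """
    return f"{{=IF(device=mobile,{mobile_text}):{default_text}}}"


# Copy quoted in this post: tailored text for mobile, default for everyone else.
headline_2 = device_if("Shop On Your Phone", "Race Ready Components")
print(headline_2)
# {=IF(device=mobile,Shop On Your Phone):Race Ready Components}
```

The printed string is what would go into the ad's text field; the condition and both versions of the copy live in that single field.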

Let’s Talk Numbers

I work on an account where the bulk of the traffic is mobile, but mobile performance often lags behind desktop. When IF function ads rolled out and offered the ability to tailor ad copy to mobile users, I was eager to test the functionality.

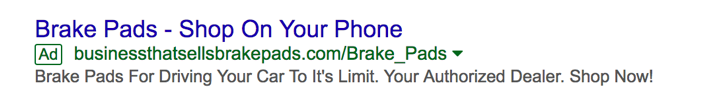

To better speak to users shopping on mobile devices, I tested IF function ads, serving shoppers on a mobile device with this message: “Shop On Your Phone.”

Any shopper not on a mobile device would see this message: “Race Ready Components.”

I tested the ad copy in one campaign with a good amount of traffic. Looking at the device breakdown of this campaign, mobile accounted for 74% of traffic, computers for 23%, and tablets for 3%. Because mobile is the main driver of traffic, the challenge is bridging the gap from traffic to purchase.

This ecommerce account is in the automotive industry, which lends itself to a higher price tag. That presents a challenge for mobile, as people are less likely to complete a pricey transaction on their mobile device. So, did IF function ads targeted toward mobile users influence mobile shoppers?

Mobile Performance

Looking only at the overall device breakdown of the campaign where the IF function ads were tested, mobile made up about 54% of the conversions at a slightly higher CPA. Computers made up about 43% of the conversions at a lower CPA. Mobile was pulling in over half of the conversions. Did the IF function ads have an impact on performance?

I compared standard expanded text ads (ETAs) to the dynamic IF function ads, broken down by device, to determine which type of ad was performing best on each device. Isolating computers, IF function ads performed slightly better than expanded text ads. The IF ads converted 27 times compared to the ETAs’ 19, at a CPA of $37.04 compared to the ETAs’ $50.69. All signs point to better performance from the dynamic IF function ads on desktop. What I found interesting is that on computers the IF function ads have the same ad copy as the expanded text ads. Both show the same headline 2 of “Race Ready Components.”
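To put those desktop numbers in relative terms, here is a quick sketch of the arithmetic. It uses only the four figures quoted above; the helper name `pct_change` is my own, and the implied spend assumes CPA is simply cost divided by conversions.

```python
def pct_change(new: float, old: float) -> float:
    """Relative change of `new` versus `old`, in percent."""
    return (new - old) / old * 100


# Desktop figures from this test.
if_conv, eta_conv = 27, 19
if_cpa, eta_cpa = 37.04, 50.69

conv_lift = pct_change(if_conv, eta_conv)   # IF ads vs. ETAs, conversions
cpa_change = pct_change(if_cpa, eta_cpa)    # IF ads vs. ETAs, CPA

# Implied spend, assuming CPA = cost / conversions.
if_cost = if_conv * if_cpa
eta_cost = eta_conv * eta_cpa

print(f"Conversions: {conv_lift:+.1f}%")    # Conversions: +42.1%
print(f"CPA: {cpa_change:+.1f}%")           # CPA: -26.9%
print(f"Implied spend: ${if_cost:.2f} vs ${eta_cost:.2f}")
```

Under that assumption, the two ad types spent a similar amount on computers (roughly $1,000 versus $963), so the gap comes from conversions rather than budget. With only 27 and 19 conversions, I would still treat these percentages as directional.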

Comparing the static expanded text ads to the IF function ads tells a different story on mobile. IF ads had 8 fewer conversions, at a CPA of $78.32 compared to the ETAs’ $70.74. The one metric that performed better on mobile was CTR. This is likely because the mobile messaging, “Shop On Your Phone,” assures shoppers that they can indeed shop these products on a mobile device. However, if I were to look only at conversions and CPA, I would assume that the IF function ads did not perform well on mobile.

There are a few things to take into account when looking at mobile performance. As I was running this ad test, mobile CPA continued to climb. In an effort to meet client goals, I needed to pull back on mobile. Around the first week of this ad copy test I bid down on mobile, perhaps causing CPA to climb. So, the higher CPA may not be solely the fault of the IF function ads. When testing these ads, I would recommend making sure you are well within your goals, so that you can avoid necessary but unexpected device bid changes during the test.

The question of why desktop saw an increase in performance metrics is still a bit of a mystery. One potential answer is that Google favors the dynamic ads compared to the static nature of expanded text ads. Perhaps in a push to further automate the PPC landscape, the system is set up to favor dynamic ads? Only further testing would help answer this question.

IF Ads vs. Expanded Text Ads

This analysis led me to further investigate IF function ad performance in relation to expanded text ad performance. Were they truly performing better than the tried-and-true expanded text ad?

The IF function ads had an 11.11% difference in conversions and a 50.69% drop in CPA. CTR for the IF function ads saw a lift of 9.44%. Whether this data is a result of dynamic ads being favored or not, it does show that these ads are worth including in your testing cycle.

What Should You Do?

Regardless of which ad Google is playing favorites with, or whether mobile performance saw a boost, ad testing is imperative. You could get your ad in front of the right audience 100% of the time, but if you don’t know how to communicate with them, you’ll never get anywhere. It’s also important to evolve your ad testing as new features become available. As the digital space becomes increasingly competitive, it is necessary to learn how to communicate well with your audience.

One other note on IF function ads is that they don’t have to be mobile specific. They can also tailor copy to specific audiences. For example, you can target cart abandoners with copy such as “Your Cart Is Ready.”
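As a rough illustration of the audience version, here is a small sketch that assembles the string, assuming the `{=IF(audience IN(list),text):default text}` format from Google's ad customizer documentation. The helper `audience_if`, the audience list name “Cart Abandoners,” and the default copy “Shop Our Parts” are all hypothetical; only “Your Cart Is Ready” comes from the example above.

```python
from typing import Sequence


def audience_if(audiences: Sequence[str], audience_text: str, default_text: str) -> str:
    """Assemble an audience-based IF function string (hypothetical helper).

    Assumed format: {=IF(audience IN(<list 1>,<list 2>),<text>):<default text>}
    """
    names = ",".join(audiences)
    return f"{{=IF(audience IN({names}),{audience_text}):{default_text}}}"


# "Cart Abandoners" stands in for whatever your audience list is actually named.
headline = audience_if(["Cart Abandoners"], "Your Cart Is Ready", "Shop Our Parts")
print(headline)
# {=IF(audience IN(Cart Abandoners),Your Cart Is Ready):Shop Our Parts}
```

The audience names would need to match the names of the lists in your own account.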

These IF function ads allow you to get granular and specific with your ad copy. They are definitely worth testing. So, what are you waiting for? Give those IF function ads a whirl.