A preprint study published by researchers at George Washington University presents evidence of social bias in the algorithms ride-sharing startups like Uber, Lyft, and Via use to price fares. In a large-scale fairness analysis of Chicago-area ride-hailing samples — made in conjunction with the U.S. Census Bureau’s American Community Survey (ACS) data — metrics from tens of millions of rides indicate ethnicity, age, housing prices, and education influence the dynamic fare pricing models used by ride-hailing apps.

The idea that dynamic algorithmic pricing disproportionately — if unintentionally — affects certain demographics is not new. In 2015, a model used by the Princeton Review was found to be twice as likely to charge Asian Americans higher test-preparation prices than other customers, regardless of income. As the use of algorithmic dynamic pricing proliferates in other domains, the authors of this study argue it’s crucial that unintended consequences — like racially based disparities — are identified and accounted for.

“When machine learning is applied to social data, the algorithms learn the statistical regularities of the historical injustices and social biases embedded in these data sets,” paper coauthors assistant professor Aylin Caliskan and Ph.D. candidate Akshat Pandey told VentureBeat via email. “With the starting point that machine learning models trained on social data contain biases, we wanted to explore if … [the] algorithmic ride-hailing data set exhibits any social biases.”

Caliskan and Pandey chose to analyze data from the city of Chicago because of a recently adopted law requiring ride-hailing apps to disclose fare prices. The city’s database includes not only fares but coarse pickup and drop-off locations, which the researchers correlated with data from the ACS, an annual survey that collects demographic statistics about U.S. residents.

The Chicago ride-hailing corpus comprises data from over 100 million rides between November 2018 and December 2019, along with taxi data for over 19 million rides. In addition to the time (rounded to the nearest 15 minutes) and charges (rounded to the nearest $2.50) for each ride, it records pickup and drop-off locations as ACS census tracts, which are county subdivisions designated by the Census Bureau that each contain about 1,200 to 8,000 people.

Filtering out samples with missing data and shared rides netted data for roughly 68 million Uber, Lyft, and Via rides. (While the corpus doesn’t distinguish between ride-hailing providers, prior estimates peg Uber’s, Lyft’s, and Via’s Chicago market shares at 72%, 27%, and 1%, respectively.) Using Iterative Effect-Size Bias (IESB), a statistical method Caliskan and Pandey propose for quantifying bias, they calculated a bias score on the fares per mile for ethnicity, age, education level, citizenship status, and median house price in Chicago.
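To make the idea of a bias score on fares per mile more concrete, here is a minimal Python sketch of a plain effect-size comparison. It is not the authors' IESB method, whose details are not given here. It assumes a simpler stand-in: split census tracts at a threshold on one demographic attribute and compute Cohen's d between the two groups' average fares per mile. The field names (`pct_over_40`, `fare_per_mile`), the threshold, and the four toy tracts are all hypothetical and are not drawn from the Chicago data.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(a, b):
    """Cohen's d between two samples, using the pooled standard deviation.

    Each sample needs at least two values, otherwise statistics.stdev raises.
    """
    na, nb = len(a), len(b)
    var_a, var_b = stdev(a) ** 2, stdev(b) ** 2
    pooled_sd = sqrt(((na - 1) * var_a + (nb - 1) * var_b) / (na + nb - 2))
    return (mean(a) - mean(b)) / pooled_sd


def effect_size_at_threshold(tracts, attribute, threshold):
    """Compare fares per mile in tracts at or above the threshold vs. below it."""
    high = [t["fare_per_mile"] for t in tracts if t[attribute] >= threshold]
    low = [t["fare_per_mile"] for t in tracts if t[attribute] < threshold]
    return cohens_d(high, low)


# Hypothetical toy tracts (made-up numbers, for illustration only).
toy_tracts = [
    {"pct_over_40": 0.2, "fare_per_mile": 3.0},
    {"pct_over_40": 0.3, "fare_per_mile": 3.2},
    {"pct_over_40": 0.6, "fare_per_mile": 2.4},
    {"pct_over_40": 0.7, "fare_per_mile": 2.6},
]

d = effect_size_at_threshold(toy_tracts, "pct_over_40", threshold=0.5)
print(f"Effect size (older tracts vs. younger tracts): {d:.2f}")
```

A negative value in this toy setup means tracts with a larger share of residents over 40 have lower fares per mile than tracts with a smaller share. An iterative variant could presumably repeat the comparison across a range of thresholds, but that is a guess at the general shape of such a method rather than a description of the paper's procedure.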

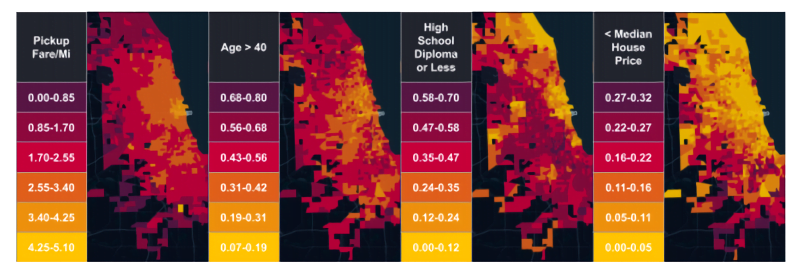

Above: City of Chicago ride-hailing data. The colors in each chart designate the average fare price per mile for each census tract.

The coauthors report an increase in ride-hailing prices when riders were picked up or dropped off in neighborhoods with a low percentage of (1) people over the age of 40, (2) people with a high school education or less, and (3) houses priced under the median for Chicago. Separately, they found that fares tended to be higher for drop-offs in Chicago neighborhoods with high non-white populations.

“Our findings imply that using dynamic pricing can lead to biases based on the demographics of neighborhoods where ride-hailing is most popular,” Caliskan and Pandey said. “If neighborhoods with more young people use ride-hailing applications more, getting picked up or dropped off in those neighborhoods will cost more, as in our findings for the city of Chicago.”

While the analysis covered only data from Chicago ride-hailing trips, Caliskan and Pandey don’t rule out the possibility that the trend could be widespread. They refer to an earlier report, published in October 2016 by the National Bureau of Economic Research, which found that in Boston and Seattle, male riders with African American names were 3 times more likely to have rides canceled and waited as much as 35% longer for rides. Another study coauthored by researchers at Northeastern University found that users standing only a few meters apart might receive dramatically different fare prices.

When contacted for comment, Uber said it doesn’t condone discrimination on its platform in any form, whether through algorithms or decisions made by its drivers. “We commend studies that try to better understand the impact of dynamic pricing so as to better serve communities more equitably,” a spokesperson told VentureBeat via email. “It’s important not to equate correlation for causation, and there may be a number of relevant factors that weren’t taken into account for this analysis, such as correlations with land-use/neighborhood patterns, trip purposes, time of day, and other effects. We look forward to seeing the final results.”

Via declined to provide a statement, and Lyft hadn’t responded at press time.

The researchers are careful to note that they don’t actually know how Uber, Lyft, and Via’s fare-pricing algorithms work because they’re proprietary, which makes proposing fixes or determining the harm they might cause a challenge. “It’s hard to say how pervasive this kind of bias is because other instances would need to be examined on a case by case basis,” Caliskan and Pandey continued. “Nevertheless, in general, whenever an algorithm is biased, over time the biases get amplified — in addition to perpetuating bias — and this might further disadvantage certain populations.”

Indeed, examples of this abound in AI and machine learning. A recent report suggests that automatic speech recognition systems developed by Apple, Amazon, Google, IBM, and Microsoft recognize black voices far less accurately than white voices. A study by the U.S. National Institute of Standards and Technology found that many facial recognition algorithms falsely identified African American and Asian faces 10 to 100 times more often than Caucasian faces. Gender biases also exist in popular corpora used to train AI language models, according to researchers. And studies show that even ecommerce product recommendation algorithms can become prejudiced against certain groups if not tuned carefully.

Ride-sharing drivers themselves are subject to the whims of potentially biased algorithms. A study published in the journal Nature shows that small changes in a simulated city environment cause “large deviations” in drivers’ income distributions, leading to an unpredictable outcome that distributes vastly different payouts to identically performing drivers.

Obvious fixes include open-sourcing the algorithms or incorporating fairness constraints, Caliskan and Pandey said. Beyond this, a 2018 study of bias in dynamic ride-sharing pricing suggests the financial incentive of higher “surge” prices can reduce bias. “There have been prior cases of bias caused by algorithmic dynamic pricing, and ride-hailing applications are now arguably one of the largest consumer-facing applications that use dynamic pricing,” they said. “On the surface, dynamic pricing is about supply and demand, but because of the way cities are often segregated by age, race, or income, this can lead to bias that is unintentionally split by neighborhood demographics.”