At SMX East I attended the “Solving Complex SEO Problems When Standard Fixes Don’t Apply” session with presenters Hannah Thorpe, Head of SEO Strategy at Found, and Arsen Rabinovitch, Founder and CEO at TopHatRank.com. Here are key learnings from each presentation.

Hannah Thorpe highlights Google changes and the user discovery experience

Hannah starts by sharing a slide with an SEO checklist used by Rand Fishkin in a past episode of Whiteboard Friday:

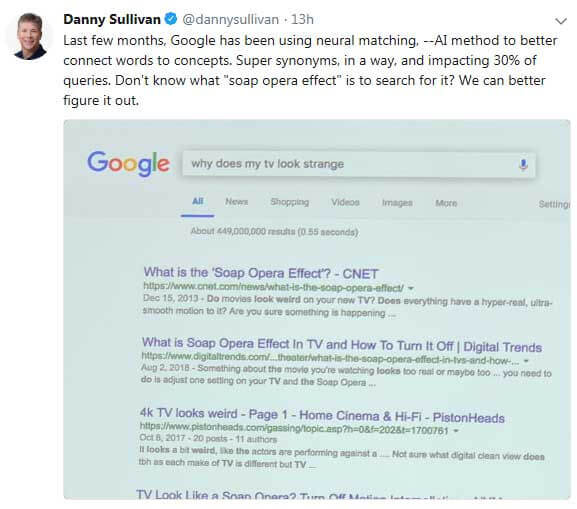

The problem is that a lot has changed since Rand published that Whiteboard Friday. One of those changes is the advent of neural matching. According to Danny Sullivan, 30% of all queries use neural matching, as shown in this example query.

In this example, we see a user asking why their TV looks strange, matched up with the common label for that (the “soap opera effect”). This is an example of neural matching in action.

Another major change is that Google is becoming a discovery engine, where the focus is more on discovery than search. This is quite literally true in the case of the Google feed. Another significant change is the addition of the topic layer.

In this result, above the knowledge graph, you can see the most relevant sub-topics to the query result.

Another significant shift is driven by Google’s increased understanding of entities. This enables Google to do a far better job of finding the content that is most relevant to the user’s query, and in fact, their actual needs (Note: my view is that this is not a deprecation of links, but an improved methodology for determining relevance).

Hannah then provides us with a table comparing the differing natures of search and discovery.

As SEOs, we tend to optimize for search, not discovery, where the entity holds a lot of the value. With this in mind, we need to start thinking about other ways to approach optimization, as suggested by Rand Fishkin.

Hannah then recommends that we attack this new SEO landscape with a three-pronged approach:

- Technical Excellence

- Well-Structured Content

- Being the Best

Use your technical expertise to reevaluate your focus

The need for this is a given, but there are four causes of failure for audits:

- Sporadic implementation

- A changing ecosystem

- No change control process

- Too many people involved

How do you get better audits? It’s about your focus. Think about these things:

- Be actionable

- Prioritize time vs. impact

- Understand the individuals involved

- Quantify everything

Be smart about your structured content

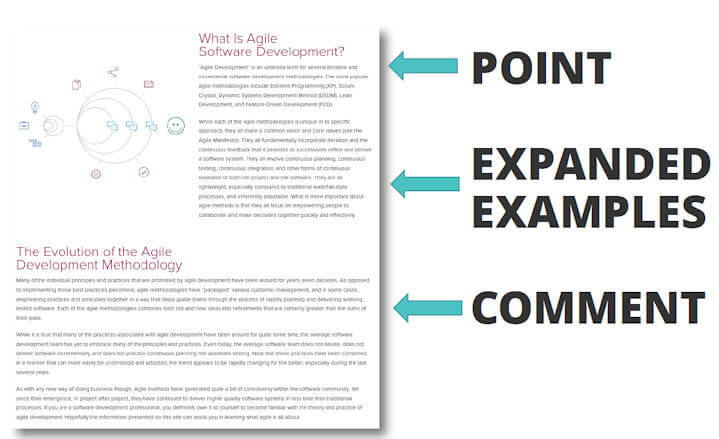

This is about making it easier for users to find what they want. Here’s a smart way to think about formatting your content:

Basically, you want to make the main point (answer the question) in the first four lines of the content. Expand upon that with examples, and then comment on that.

The specifics of how you format the content matter too. Some basic ideas on how to do this are:

- Avoid large blobs of text – keep paragraphs short

- Keep tables clean and avoid large chunks of text in them

- Avoid excessive text in bulleted lists, and don’t double space them either

- Add structured markup to your content
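
As a minimal sketch of the last bullet, the snippet below builds schema.org `Article` markup as JSON-LD, which is one common way to add structured markup to a page. The helper name `article_jsonld` and all sample values are illustrative assumptions, not something shown in the presentation.

```python
import json


def article_jsonld(headline, author, date_published):
    """Return a <script> tag holding schema.org Article markup as JSON-LD.

    Hypothetical helper for illustration; the field values are placeholders.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Example usage with made-up values.
snippet = article_jsonld("Example headline", "Jane Doe", "2018-11-01")
```

The resulting tag would typically go in the page's HTML, and the right schema.org type depends on what the page actually contains.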

Be the best in your own space

This is critical to success in today’s world. It doesn’t mean that you need to be the best at everything, but carve out your space and then own it.

This starts by understanding where the user is on their journey when they reach your site. What are their needs at the moment that they arrive on your web page? Understand all the possible circumstances for visitors to your page, and then devise a strategy to address the best mix of those situations possible.

Remember, Google is focusing on users, not websites. You need to focus on your target users too.

=============================

Arsen Rabinovitch outlines how to evaluate SEO problems and what tools can get you back on track

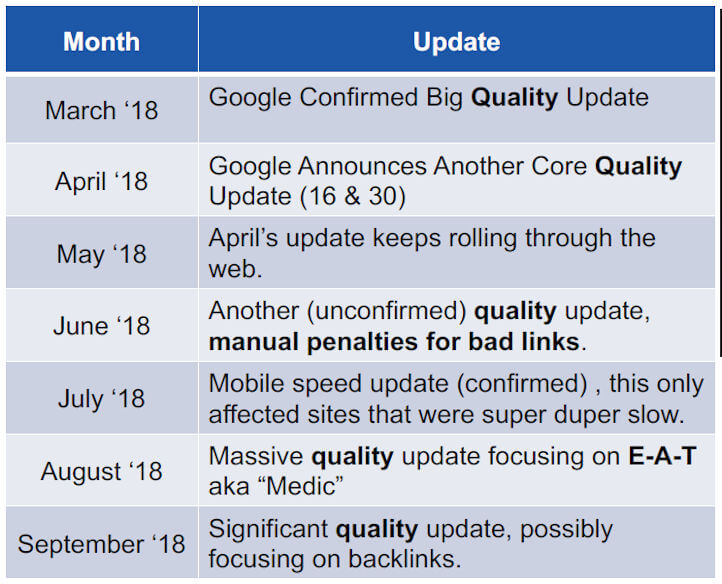

This was a big year for Google updates, and those updates prompted traffic changes, some of them significant, for a lot of sites.

Here’s a short summary of the updates that occurred.

For some sites, the impact of this was quite large. You can see an example in this SEMrush chart of one site that suffered two large drops during the year.

Once you have experienced significant traffic loss, it’s time for SEO triage. The first step is to look at the symptoms.

- We lost positions

- We lost organic traffic

- We lost conversions

It’s also helpful to create a timeline, as shown here.

Once this initial research is complete, it’s time to do some diagnostics. It’s helpful to start by ruling out as many potential causes as possible. Some of the things to check for are:

- Check for manual actions

- Look to see if the symptoms are environmental

- Check if the symptoms just started presenting themselves

- Determine if the symptoms are chronic

It’s also useful to see what’s being affected. Is it the entire site or just some pages? You should also check to see if competitors are affected as well.

Further digging can involve checking which queries lost clicks (or rankings). You can also use third-party tools such as SEMrush to confirm your findings. Make sure to also check the URL inspection reporting in the new GSC to see if Google is reporting any problems there. Check to see if the SERPs themselves changed. You can also check the index coverage report, and look for URLs that are indexed but not in the sitemap.
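
The "indexed but not in the sitemap" check can also be sketched in a few lines of Python. This is only an illustration: it assumes you already have a list of indexed URLs (for example, exported from the coverage report) and the text of a standard XML sitemap. The function names and the sample sitemap are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Extract every <loc> value from a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    }


def indexed_not_in_sitemap(indexed_urls, sitemap_xml):
    """Return indexed URLs that the sitemap does not list, sorted."""
    return sorted(set(indexed_urls) - sitemap_urls(sitemap_xml))


# Made-up sample data for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
</urlset>"""
```

The comparison is an exact string match, so differences such as trailing slashes or `http` versus `https` will show up as mismatches and may need normalizing first.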

Checking coverage reporting is also a good idea. Some things to look for there include:

- Look for large chunks of pages moving in or out

- Blocked by robots.txt

- Excluded by NoIndex tag

- Crawled – currently not indexed

- Alternate page with proper canonical tag
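
To double-check the "Blocked by robots.txt" item outside of Search Console, a rough sketch using Python's standard `urllib.robotparser` module might look like this. The function name, user agent default, and sample rules are assumptions for illustration. The standard-library parser may not match Googlebot's own handling in every case (for example, wildcard patterns), so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Made-up robots.txt rules for illustration only.
SAMPLE_ROBOTS = """User-agent: *
Disallow: /private/
"""
```

Running it against a list of important landing pages is a quick way to spot a page that was blocked by accident.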

Search Console is truly a goldmine of diagnostics, and here are some more things you can look into:

- Check crawl stats

- Check blocked resources report

- Check HTML improvements report

Another rich area of information is your web server logs. These are files kept by your web hosting company that contain a ton of information on every search engine crawler and every visitor to your website. Things to look for here include:

- Weird status codes

- Spider traps

- Performance issues

- Intermittent alternating status codes
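
As a hedged sketch of what looking for weird or alternating status codes could involve, the snippet below counts status codes and flags paths that returned more than one status. It assumes the logs use the Common or Combined Log Format. The regular expression, function name, and sample lines are illustrative assumptions, and a real log may need a different pattern.

```python
import re
from collections import Counter, defaultdict

# Matches the request and status fields of a Common/Combined Log Format line.
LOG_LINE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def summarize_log(lines):
    """Return (status code counts, {path: statuses} for paths with >1 status)."""
    status_counts = Counter()
    statuses_by_path = defaultdict(set)
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that don't look like access log entries
        status = int(match.group("status"))
        status_counts[status] += 1
        statuses_by_path[match.group("path")].add(status)
    unstable = {p: s for p, s in statuses_by_path.items() if len(s) > 1}
    return status_counts, unstable


# Made-up sample lines for illustration only.
SAMPLE_LOG = [
    '66.249.66.1 - - [01/Nov/2018:10:00:00 +0000] "GET /page HTTP/1.1" 200 512 "-" "Googlebot/2.1"',
    '66.249.66.1 - - [01/Nov/2018:10:05:00 +0000] "GET /page HTTP/1.1" 503 0 "-" "Googlebot/2.1"',
    '66.249.66.1 - - [01/Nov/2018:10:06:00 +0000] "GET /about HTTP/1.1" 200 256 "-" "Googlebot/2.1"',
]
```

A path that shows up with both a 200 and a 5xx status, like `/page` in the sample, is a candidate for the intermittent problems mentioned above.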

Don’t forget to look at your backlinks, as you may spot problems there that impact the entire site. Use a crawler tool such as Screaming Frog or DeepCrawl to crawl your site. Identify and fix all the issues you find there, and look for pages that are not getting crawled by your crawler and find out why.

Arsen also shared a number of one-slide guides that capture much of the above. You can go check those out in his presentation below.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.