Advanced technical SEO is not without its challenges, but luckily there are many tools on the market we can use. By combining some of these tools we can not only address the challenges we face, but also create new solutions and take our SEO to the next level. In this guide I combine three distinct tools: a major cloud provider (Google Cloud), a leading open source operating system (Ubuntu) and a crawl analysis tool (Screaming Frog SEO Spider).

Examples of solutions this powerful combination can bring to the table are:

- To create XML Sitemaps using daily scheduled crawls and automatically make these available publicly for search bots to use when crawling and indexing your website;

- To have your own personal in-house SEO dashboard from repeat crawls;

- To improve site speed for users and search bots by priming CDNs from different locations through regular crawls of your most important pages;

- To run crawls fast and in parallel from a stable connection in the cloud, instead of hogging memory and bandwidth on your local computer.

Combined with SEO expertise and a deep understanding of data, this and so much more can be achieved.

Both Google Cloud and Screaming Frog have improved a lot in the last few years, so here is the updated, much shorter and easier guide to running one or multiple instances of Screaming Frog SEO Spider in parallel in the Google Cloud or on your own Virtual Private Server (VPS).

Quick start

Assuming you already know how to use Linux and have a remote Ubuntu 18.04 LTS instance with enough resources running somewhere, e.g. on Google Cloud, and you just want to download, install and/or update Screaming Frog SEO Spider on it in a jiffy, you can skip most of this guide. Log into the remote instance and issue the following one-line command in its terminal:

wget https://seo.tl/wayd -O install.sh && chmod +x install.sh && ./install.sh

If this does not work, or to better understand how to set up the remote instance, transfer data, schedule crawls and keep your crawl running when you are not logged into the remote instance, continue reading.

Dependencies

Before this guide continues, there are a few points that need to be addressed first.

First, the commands in this guide are written as if your primary local operating system is a Linux distribution. However, most of the commands work the same locally on Windows and/or macOS, perhaps with minor tweaks. In case of doubt, or if you want to install Linux locally when you are on Windows, you can install different versions of Linux for free from the official Windows Store, for example Ubuntu 18.04 LTS. It is very useful to have some experience with accessing the terminal/command line interface on your operating system.

Second, you will need a Google Cloud account, enable billing on this account, create a Google Cloud project and install the gcloud command line tool locally on your Linux, macOS or Windows operating system. If you created a new Google Cloud project for this guide, it can help to visit the Google Compute Engine overview page of your project in a web browser to automatically enable all necessary APIs to perform the tasks below. Be mindful that running Screaming Frog SEO Spider in the cloud will cost money. Use budget alerts to notify you when costs rise above expectations.

Alternatively, if you have an Ubuntu 18.04 LTS based VPS somewhere or an Amazon AWS or Azure account, you can use that for this guide too. The commands for creating an instance are different and depend on the cloud provider you are using, but the overall principles are the same. To help you use this guide independently of any cloud provider, I included generic instructions on how to connect below to get you started.

Third, you will need to have a valid and active Screaming Frog SEO Spider license.

Lastly, and this is optional, if you have a specific crawl configuration you want to use with Screaming Frog SEO Spider you need to install Screaming Frog SEO Spider locally, configure it and export the configuration settings as a new file, hereafter referred to as:

default.seospiderconfig

Assuming the points above are all checked, you can proceed and set up one or multiple separate instances to crawl with Screaming Frog SEO Spider remotely and in parallel in the cloud using this guide.

Setting up the Google Compute Engine instances

First, go to the terminal/command line interface (hereafter referred to as terminal) on your local computer and navigate to the folder you want to work from (e.g. store all the crawls).

Next, identify the project id of your Google Cloud project and choose a Google Compute Engine zone. You will need this going forward.

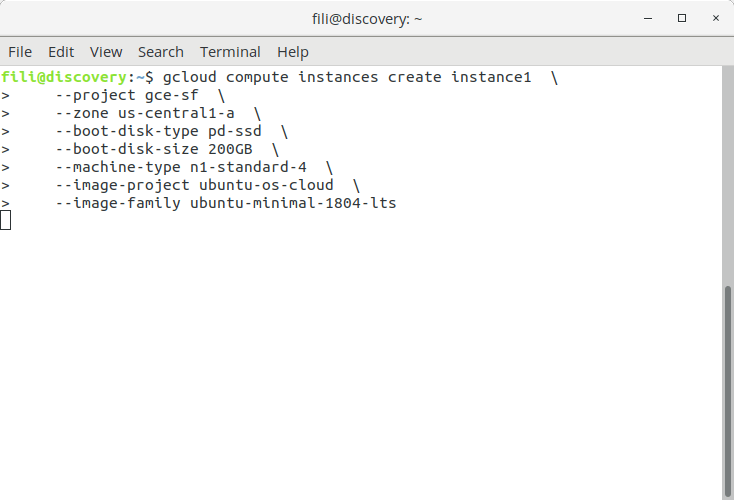

Issue the following command in the terminal to create a remote Google Compute Engine instance in the Google Cloud:

gcloud compute instances create <NAME> \
  --project <PROJECT_ID> \
  --zone <ZONE> \
  --boot-disk-type pd-ssd \
  --boot-disk-size 200GB \
  --machine-type n1-standard-4 \
  --image-project ubuntu-os-cloud \
  --image-family ubuntu-minimal-1804-lts

Replace <NAME> with any name, for the purpose of this guide I will go with “instance1”.

Replace <PROJECT_ID> with the project id from your Google Cloud project, for the purpose of this guide I will go with “gce-sf”.

And replace <ZONE> with the chosen zone, for the purpose of this guide I will go with “us-central1-a”.

Now the command looks like:

gcloud compute instances create instance1 \
  --project gce-sf \
  --zone us-central1-a \
  --boot-disk-type pd-ssd \
  --boot-disk-size 200GB \
  --machine-type n1-standard-4 \
  --image-project ubuntu-os-cloud \
  --image-family ubuntu-minimal-1804-lts

This will create a remote instance on Google Compute Engine, with a 200GB SSD hard disk, 4 vCPUs, 15GB RAM and using Ubuntu 18.04 LTS minimal as operating system. If you like you can change the size for the hard disk or replace the SSD with a classic hard disk (at least 60GB) or change the number of CPUs and RAM by choosing another machine type.

Creating a new instance on Google Compute Engine using the gcloud command line tool.
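When several instances share the same settings, the create command above can be wrapped in a small shell function. The following is only a minimal sketch and not part of the original setup: the function name create_instance_cmd and the DISK_SIZE and MACHINE_TYPE variables are hypothetical, and the function prints the command for review instead of running it.

```shell
#!/bin/sh
# Sketch: print (not run) the gcloud command that creates one crawl instance.
# PROJECT_ID, ZONE, DISK_SIZE and MACHINE_TYPE are illustrative defaults taken
# from this guide; override them in the environment as needed.
PROJECT_ID="${PROJECT_ID:-gce-sf}"
ZONE="${ZONE:-us-central1-a}"
DISK_SIZE="${DISK_SIZE:-200GB}"
MACHINE_TYPE="${MACHINE_TYPE:-n1-standard-4}"

create_instance_cmd() {
  # $1 = instance name
  printf 'gcloud compute instances create %s' "$1"
  printf ' --project %s --zone %s' "$PROJECT_ID" "$ZONE"
  printf ' --boot-disk-type pd-ssd --boot-disk-size %s' "$DISK_SIZE"
  printf ' --machine-type %s' "$MACHINE_TYPE"
  printf ' --image-project ubuntu-os-cloud --image-family ubuntu-minimal-1804-lts\n'
}

# Print one command per instance name.
for name in instance1 instance2; do
  create_instance_cmd "$name"
done
```

Once the printed commands look right, they can be copied into the terminal or piped into sh to execute them.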

Copying configuration settings (optional)

Now that the remote instance is created, it is a good time to transfer the Screaming Frog SEO Spider configuration file stored locally on our computer to the remote instance. Issue the following command in the terminal on the local computer:

gcloud compute scp \
  <FILENAME> \
  <NAME>:~/ \
  --project <PROJECT_ID> \
  --zone <ZONE>

Again replace <NAME>, <PROJECT_ID> and <ZONE> with the same values as in the previous step. Also replace <FILENAME> with the name of the local configuration file, which for the purpose of this guide is named “default.seospiderconfig”. Now the command looks like:

gcloud compute scp \
  default.seospiderconfig \
  instance1:~/ \
  --project gce-sf \
  --zone us-central1-a

You can skip this step if you are just testing this guide or if you want to use the default Screaming Frog SEO Spider configuration without any changes.

Command for transferring the Screaming Frog SEO Spider config file to Google Compute Engine instance.

Connect to the remote instance

Now that our remote instance is running, connect to it and configure it for our Screaming Frog SEO Spider installation. Issue the following command in the terminal on your local computer:

gcloud compute ssh \
  <NAME> \
  --project <PROJECT_ID> \
  --zone <ZONE>

Again replace <NAME>, <PROJECT_ID> and <ZONE> with the same values as in the previous steps. Now the command looks like:

gcloud compute ssh \
  instance1 \
  --project gce-sf \
  --zone us-central1-a

This is how you reconnect to the remote instance whenever you are disconnected.

Command for connecting to the Google Compute Engine instance.

Alternative VPS or cloud hosting (AWS/Azure)

The steps above are specific to Google Cloud as they utilize the Google Cloud command line tool gcloud. If you would like to use another cloud provider such as Amazon AWS or Microsoft Azure, you can use their command line tools and documentation to set up your instances.

If you plan to use a different VPS, that can work just as well. Issue the following command in the terminal to make a secure connection to the VPS:

ssh <USERNAME>@<IP_ADDRESS_OR_HOSTNAME>

Replace <USERNAME> and <IP_ADDRESS_OR_HOSTNAME> with the settings of the VPS.

And issue the following command in the terminal to copy the configuration file to the VPS:

scp <CONFIG_FILE> <USERNAME>@<IP_ADDRESS_OR_HOSTNAME>:~/

Replace <CONFIG_FILE>, <USERNAME> and <IP_ADDRESS_OR_HOSTNAME> based on the name of the config file and the settings of your VPS. Now the command looks like:

scp default.seospiderconfig [email protected]:~/

Just make sure that for the purpose of this guide and the installation script the following requirements are met:

- The operating system is Ubuntu 18.04 LTS (recommended) or higher.

- The instance has at least 8 GB RAM and 1 CPU.

- The instance has at least 100 GB hard disk partitioned on the home folder.
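The requirements above can be checked on the remote instance before running the installation script. This is a rough sketch, not something the installation script does itself: the helper names check_min, ram_gib and home_disk_gib are hypothetical, it assumes a Linux system with GNU coreutils (as on Ubuntu), and the operating system version is not checked. Reported sizes are usually a little below the nominal ones because of filesystem and kernel overhead, so treat a "low" result for the disk as a prompt to look closer rather than as a hard failure.

```shell
#!/bin/sh
# Sketch: compare a measured value against a minimum and report the result.
check_min() {
  # $1 = label, $2 = measured value, $3 = required minimum (whole numbers)
  if [ "$2" -ge "$3" ]; then
    echo "ok: $1 is $2 (minimum $3)"
  else
    echo "low: $1 is $2 (minimum $3)"
    return 1
  fi
}

# Total RAM in GiB, rounded up, read from /proc/meminfo (Linux only).
ram_gib() {
  awk '/^MemTotal:/ { printf "%d\n", ($2 + 1048575) / 1048576 }' /proc/meminfo
}

# Size in GiB (rounded up by df) of the filesystem holding the home folder.
home_disk_gib() {
  df -BG --output=size "$HOME" | tail -n 1 | tr -dc '0-9'
}

failed=0
check_min "RAM (GiB)" "$(ram_gib)" 8 || failed=1
check_min "CPU count" "$(nproc)" 1 || failed=1
check_min "home disk (GiB)" "$(home_disk_gib)" 100 || failed=1
echo "checks below minimum: $failed"
```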

Connect to the remote instance and let’s continue to set up Screaming Frog SEO Spider.

Installing Screaming Frog SEO Spider on the remote instance

Now that you are connected to the remote instance in the terminal, the next step is to download and run the installation script. My previous guide was approximately 6 thousand words, most of which were dedicated to installing the software and setting up a graphic interface to manage the remote Screaming Frog SEO Spider instance. In this guide most of that is replaced with just one line which does all the heavy lifting for you. For this reason it is important to meet the requirements listed above, or the software installation and configuration may fail.

To download and run the installation script issue the following command in the terminal on the remote instance:

wget https://seo.tl/wayd -O install.sh && chmod +x install.sh && ./install.sh

Wait for about 5 minutes (sometimes it may take up to 10 minutes) until all installation steps have completed and you see the success message. The script will start by asking you for:

- Your Screaming Frog SEO Spider license username and license key (for testing purposes you can fill out anything here, but in the next steps Screaming Frog SEO Spider will not run if the license details are not valid);

- If you want to change the defaults for the Screaming Frog SEO Spider memory allocation file (default is 50GB);

- And if you want to utilize the database storage mode (default is no).

If you have any problems, rerun the command.

Command for installing Screaming Frog SEO Spider on Google Compute Engine (Google Cloud).

For the purpose of this guide, let’s also create a subdirectory “crawl-data” in the home folder of the current user on the remote instance to save all the crawl data into. Issue the following command:

mkdir crawl-data

Using tmux on the remote instance

Now that Screaming Frog SEO Spider is installed, let’s run it on the remote instance from the terminal. To make sure the loss of the connection to the remote instance doesn’t stop a crawl it is prudent to issue the command to run Screaming Frog SEO Spider independently from the connection to the remote instance.

The installation script also installed a widely used command line tool for this purpose, called tmux. Guides and documentation to become a tmux wizard are easy to find online. To start tmux issue the following command in the terminal on the remote instance:

tmux

This creates a terminal session independent from your connection to the remote instance. Now if you want to disconnect from this session, you can issue the following command to detach from within the tmux terminal session:

tmux detach

Or, for example when Screaming Frog SEO Spider is crawling and the prompt is not available, press Ctrl-b followed by the letter d.

If you want to reconnect to the tmux terminal session, for example when you log into the remote instance a few hours later again, issue the following command to connect to the tmux terminal session:

tmux attach -t 0

The zero in the command above refers to the first active tmux session. There may be more, especially if you accidentally ran the command tmux several times without using “attach” or if you run multiple Screaming Frog SEO Spider crawls on the same remote instance. Yes, this is very easy to do with tmux, but RAM, swap and CPU may become an issue. You can list all active tmux sessions by issuing the following command:

tmux list-sessions

Then open each tmux terminal session (using “tmux attach -t <NUMBER>”) to see what is running in it, and disconnect again (“tmux detach”).
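Numbered sessions get hard to tell apart when several crawls run on one instance, so it can help to give each session a name. The sketch below prints a tmux command that would start a crawl in a detached, named session. The function name crawl_session_cmd and the session name are hypothetical, and the command is only printed so it can be reviewed first.

```shell
#!/bin/sh
# Sketch: build the tmux command that starts a crawl in a named, detached session.
crawl_session_cmd() {
  # $1 = session name (no dots or colons), $2 = URL to crawl
  printf "tmux new-session -d -s %s 'screamingfrogseospider --crawl %s --headless --save-crawl --output-folder ~/crawl-data/ --timestamped-output'\n" "$1" "$2"
}

# Print the command for one example crawl.
crawl_session_cmd crawl-example https://example.com/

# Reattach later with: tmux attach -t crawl-example
```

A named session can then be attached to with its name instead of a number, which makes the output of tmux list-sessions easier to read.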

Running Screaming Frog SEO Spider on the remote instance

Now that you understand how to use tmux, open an unused tmux terminal session and issue the following command in the tmux terminal session on the remote instance:

screamingfrogseospider \
  --crawl https://example.com/ \
  --headless \
  --save-crawl \
  --output-folder ~/crawl-data/ \
  --timestamped-output \
  --create-sitemap

This command is only for testing that Screaming Frog SEO Spider is set up and working as expected. To see the result of the test crawl, issue the following command:

ls -al ~/crawl-data/

If all went well, a new subfolder in the crawl-data folder has been created with a timestamp as its name. This folder contains the data saved from the crawl, in this case a sitemap.xml and a crawl.seospider file from Screaming Frog SEO Spider which allows us to load it in any other Screaming Frog SEO Spider instance on any other computer.

Command for running Screaming Frog SEO Spider on Google Compute Engine (Google Cloud).

You can configure your crawls to use the configuration file mentioned above or to crawl a dedicated list of URLs. To learn more about the command line options to run and configure Screaming Frog SEO Spider on the command line, check out the documentation and/or issue the following command in the terminal on the remote instance:

screamingfrogseospider --help
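As an illustration of the two variations mentioned above, the following sketch assembles a headless crawl command that optionally loads a configuration file and switches to a URL list when the target is a local file. It assumes the option names are --config and --crawl-list, so verify them against the --help output of your installed version. The function name run_crawl and the DRY_RUN switch are hypothetical, and by default the command is only printed.

```shell
#!/bin/sh
# Sketch: assemble Screaming Frog SEO Spider arguments for a headless crawl.
# Assumption: --config and --crawl-list are the option names; check --help.
run_crawl() {
  # $1 = URL, or path to a text file with one URL per line
  # $2 = optional path to a .seospiderconfig file
  target="$1"
  config="${2:-}"
  set -- --headless --save-crawl --output-folder "$HOME/crawl-data/" --timestamped-output
  if [ -f "$target" ]; then
    set -- "$@" --crawl-list "$target"
  else
    set -- "$@" --crawl "$target"
  fi
  if [ -n "$config" ]; then
    set -- "$@" --config "$config"
  fi
  # DRY_RUN=1 (the default here) prints the command instead of running it.
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo screamingfrogseospider "$@"
  else
    screamingfrogseospider "$@"
  fi
}

run_crawl https://example.com/ "$HOME/default.seospiderconfig"
```

Setting DRY_RUN=0 in the environment would run the crawl for real instead of printing the command.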

Downloading the crawl data

Now that the crawling has been tested, let’s make sure that the crawl data collected is not lost and can be used. There are several options to get access to the crawl data. For the purpose of this guide the crawl data is stored in a subdirectory, named by timestamp, in the directory crawl-data which is located in the home folder of the user, e.g.

~/crawl-data/2019.06.01.12.30.13/

Direct transfer

The first option is to transfer the data from the remote instance to the local computer by issuing the following command in the terminal on the local computer:

gcloud compute scp \
  <NAME>:<DIRECTORY_NAME> . \
  --recurse \
  --project <PROJECT_ID> \
  --zone <ZONE>

Again replace <NAME>, <PROJECT_ID> and <ZONE> with the same values as in the previous steps, and replace <DIRECTORY_NAME> with the directory on the remote instance that holds the crawl data. Now the command looks like:

gcloud compute scp \
  instance1:~/crawl-data/* . \
  --recurse \
  --project gce-sf \
  --zone us-central1-a

Alternatively, when using another cloud provider or a VPS, issue the following command in the terminal on the local computer to transfer the files to the current working directory over a secure connection:

scp -r <USERNAME>@<IP_ADDRESS_OR_HOSTNAME>:~/<DIRECTORY_NAME> .

Replace <DIRECTORY_NAME>, <USERNAME> and <IP_ADDRESS_OR_HOSTNAME> based on the name of the directory and the settings of your VPS. Now the command looks like:

scp -r [email protected]:~/crawl-data/ .

Note that command line tools from other cloud providers may use different commands to accomplish the same.

Store in the cloud

The second option is to back up the crawl data to the Google Cloud in a Google Cloud Storage bucket. To make this happen, create a new storage bucket by issuing the following command (in either the terminal on the local computer or remote instance):

gsutil mb -p <PROJECT_ID> gs://<BUCKET_NAME>/

Replace <PROJECT_ID> with the Google Cloud project id and choose a name for <BUCKET_NAME>. Bucket names must be unique across Google Cloud Storage and many are already taken, so it may take a few tries before you get one that works for you. Now the command looks like:

gsutil mb -p gce-sf gs://sf-crawl-data/

Transfer the whole directory with the crawl data, including all subdirectories, from the remote instance to the storage bucket by issuing the following command in the terminal on the remote instance:

gsutil cp -r <DIRECTORY_NAME> gs://<BUCKET_NAME>/

Replace <DIRECTORY_NAME> with the directory to transfer from the remote instance to the storage bucket and the <BUCKET_NAME> with the name of the storage bucket. Now the command looks like:

gsutil cp -r crawl-data gs://sf-crawl-data/

Now that the data is safely backed up in a Google Cloud Storage bucket, it opens up a number of exciting opportunities for SEO. For example, if a sitemap export from the crawl is stored in the Google Cloud Storage bucket, you can make it public and reference it from your robots.txt file so it acts as the daily generated XML Sitemap for your website. In addition, you can now also choose to import the exported CSV reports from Screaming Frog SEO Spider into Google BigQuery and use Google Data Studio to display the data in meaningful graphs. This and more is possible now that the data is accessible in the cloud.
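To make the public sitemap idea concrete, here is a small sketch that prints the command to grant public read access to one object and the matching line for robots.txt. It is illustrative only: the helper name public_url is hypothetical, the object path reuses the example timestamp from this guide, and the gsutil acl ch command assumes the bucket uses fine-grained (per-object) access control rather than uniform bucket-level access.

```shell
#!/bin/sh
# Sketch: print the public URL of an object in a Cloud Storage bucket and the
# matching robots.txt line. Bucket and object names are illustrative.
public_url() {
  # $1 = bucket name, $2 = object path inside the bucket
  printf 'https://storage.googleapis.com/%s/%s\n' "$1" "$2"
}

BUCKET="sf-crawl-data"
OBJECT="crawl-data/2019.06.01.12.30.13/sitemap.xml"

# Command that would grant public read access to just this object (printed only).
echo "gsutil acl ch -u AllUsers:R gs://$BUCKET/$OBJECT"

# Line to add to the robots.txt file of the website.
echo "Sitemap: $(public_url "$BUCKET" "$OBJECT")"
```

Because the timestamped folder name changes with every crawl, it may be more practical to copy the latest sitemap to a fixed object name in the bucket and reference that fixed URL from robots.txt.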

Updating Screaming Frog SEO Spider on the remote instance

If you want to update Screaming Frog SEO Spider or something went wrong during the installation and you want to try it again, just connect to the remote instance and issue the following command in the terminal on the remote instance:

wget https://seo.tl/wayd -O install.sh && chmod +x install.sh && ./install.sh

When running the installation script again, Screaming Frog SEO Spider will be automatically updated to its latest release.

Running multiple instances

You can repeat the steps above to create as many instances as you want, install Screaming Frog SEO Spider on each and run multiple different crawls in parallel. For example, I often run multiple remote instances at the same time, each crawling different URLs.

Scheduling repeat crawls

Now that the remote instance is running, it is possible to schedule regular crawls using cron. First decide how often the regular crawl needs to run. If you are unfamiliar with cron schedule expressions, an online cron expression editor can help. For the purpose of this guide the scheduled crawl will run 1 minute after midnight and then every following 12 hours.

To proceed, cron needs to be configured by issuing the following command in the terminal on the remote instance:

crontab -e

This will open the terminal editor nano, which was also installed by the installation script above. Once inside the nano editor, add the following line to crontab:

<CRON_SCHEDULE_EXPRESSIONS> <COMMAND> >> <LOG_FILE>

For debugging purposes it is strongly recommended to keep the output of the crawl logged somewhere. The easiest way to do this is to append the runtime output to a plain text file, which for the purpose of this guide is called:

cron-output.txt

Replace <CRON_SCHEDULE_EXPRESSIONS> with the schedule, <COMMAND> with the actual command to run Screaming Frog SEO Spider (on one line) and <LOG_FILE> with the log file. Now the line to add to crontab looks like:

1 */12 * * * screamingfrogseospider --crawl https://example.com/ --headless --save-crawl --output-folder ~/crawl-data/ --timestamped-output --create-sitemap >> ~/cron-output.txt

This process can be repeated for transferring the crawl data to the Google Cloud Storage bucket on a regular basis by adding the following line to crontab:

46 11,23 * * * gsutil cp -r crawl-data gs://sf-crawl-data/ >> ~/cron-output.txt

This line copies the crawl-data directory in full to the storage bucket at 11:46 and 23:46, which is 15 minutes before the next scheduled crawl starts. Assuming only the latest crawl needs to be transferred, the easiest way of accomplishing this is to automatically delete all past crawl data after the scheduled transfer by adding the following line to crontab instead:

46 11,23 * * * gsutil cp -r crawl-data gs://sf-crawl-data/ && rm -rf ~/crawl-data/* >> ~/cron-output.txt

Adding the two lines, one for running the scheduled crawl and one for the scheduled transfer and deletion, is useful. However, it is more efficient to combine these three separate commands into one line in cron, each dependent on the successful completion of the previous one (in other words, if one fails the commands afterwards will not be executed), which looks like:

1 */12 * * * screamingfrogseospider --crawl https://example.com/ --headless --save-crawl --output-folder ~/crawl-data/ --timestamped-output --create-sitemap && gsutil cp -r crawl-data gs://sf-crawl-data/ && rm -rf ~/crawl-data/* >> ~/cron-output.txt

If this gets too complicated it is also possible to learn the amazing world of shell scripts, write your own shell script and put the separate commands in there and then execute the shell script using cron.
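As a starting point for such a shell script, the sketch below puts the crawl, upload and cleanup steps into separate functions and chains them so that each step runs only if the previous one succeeded. The function names, the variables and the "run" argument are hypothetical choices for this illustration, and the values are the examples used in this guide.

```shell
#!/bin/sh
# Sketch of a crawl-then-upload script meant to be called from cron.
# URL, BUCKET, DATA_DIR and LOG_FILE are illustrative; adjust before use.
URL="${URL:-https://example.com/}"
BUCKET="${BUCKET:-gs://sf-crawl-data/}"
DATA_DIR="${DATA_DIR:-$HOME/crawl-data}"
LOG_FILE="${LOG_FILE:-$HOME/cron-output.txt}"

crawl() {
  screamingfrogseospider --crawl "$URL" --headless --save-crawl \
    --output-folder "$DATA_DIR/" --timestamped-output --create-sitemap
}

upload() {
  gsutil cp -r "$DATA_DIR" "$BUCKET"
}

cleanup() {
  # ${DATA_DIR:?} aborts instead of expanding to "/*" if DATA_DIR is empty.
  rm -rf "${DATA_DIR:?}"/*
}

run_pipeline() {
  # Each step runs only if the previous one succeeded.
  crawl && upload && cleanup
}

# Run only when explicitly requested, so the functions can be loaded and tested.
if [ "${1:-}" = "run" ]; then
  run_pipeline >> "$LOG_FILE" 2>&1
fi
```

Saved under a name of your choice, for example ~/crawl-and-upload.sh, the script could then be scheduled with a single short crontab line such as "1 */12 * * * /bin/sh ~/crawl-and-upload.sh run". Redirecting inside the script also captures the output and error messages of all three steps in the log file.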

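To close and save the new cron job, use the keyboard shortcut Ctrl-X, press Y to confirm saving the new settings and press Enter to accept the file name. To become a wizard in using cron, the crontab manual page (man 5 crontab) is a good place to start.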

Cron scheduling Screaming Frog SEO Spider on Google Compute Engine.

Deleting the remote instances and storage bucket

To avoid accruing additional costs when you are not using the remote instance to crawl (so when you don’t have repeat crawls scheduled and you are done crawling), it is important to delete the instance. Keeping it running can be costly in the long term. To delete your instances, issue the following command in the terminal on your local computer:

gcloud compute instances delete \
  <NAME> \
  --project <PROJECT_ID> \
  --zone <ZONE>

Again replace <NAME>, <PROJECT_ID>, <ZONE> with the same values as in the previous steps. Now the command looks like:

gcloud compute instances delete \
  instance1 \
  --project gce-sf \
  --zone us-central1-a

To delete multiple instances in one go, just add the different instance names to the command like this:

gcloud compute instances delete \
  instance1 instance2 instance3 \
  --project gce-sf \
  --zone us-central1-a

Warning: when you delete the remote instance, all data stored on the remote instance is also deleted and cannot be recovered later. Be sure to transfer all data from the remote instances to your local computer or a Google Cloud Storage bucket before using the delete command.

In addition, you can delete the Google Cloud Storage bucket by issuing the following command in the terminal:

gsutil rm -r gs://<BUCKET_NAME>/

Replace <BUCKET_NAME> with the chosen name for the bucket. Now the command looks like:

gsutil rm -r gs://sf-crawl-data/

Final thoughts

I want to close on a cautionary note, and that is to keep an eye on the cost of the remote instances and the data stored in your cloud bucket. Running Screaming Frog SEO Spider in the cloud does not need to cost much, but take your eye off the ball and it can get costly.

I also highly recommend reading the different documentation and manuals for the different programs mentioned in this guide, as you will find that many adaptations of the commands used in this guide are possible and may make more sense for your situation.

Happy crawling!

Opinions expressed in this article are those of the guest author and not necessarily those of Search Engine Land.